The advent of Multimodal Large Language Models (MLLMs) has ushered in a new era of mobile device agents that can understand and interact with the world through text, images, and voice. These agents mark a significant advance over traditional AI assistants, giving users a richer and more intuitive way to interact with their devices. By leveraging MLLMs, these agents can process and synthesize large amounts of information from multiple modalities, which lets them offer personalized assistance and improve the user experience.

These agents are powered by state-of-the-art machine learning techniques and advanced natural language processing capabilities. This allows them to understand and generate human-like text and to interpret visual and auditory data. They can handle a wide range of inputs, from recognizing objects and scenes in images to understanding spoken commands and analyzing text sentiment. The technology opens the door to more sophisticated, context-aware services, such as virtual assistants attuned to human emotions and educational tools that adapt to individual learning styles. It could also improve accessibility, making technology easier to use across language and sensory barriers.

In this article, we will talk about Mobile-Agent, an autonomous multimodal mobile device agent. It first uses visual perception tools to accurately identify and locate the visual and textual elements within a mobile application's front-end interface. Using this perceived visual context, the Mobile-Agent framework autonomously plans and decomposes complex operation tasks, and then navigates through mobile apps one operation at a time. Mobile-Agent differs from existing solutions in that it does not rely on mobile system metadata or on the XML files of mobile applications. This vision-centric approach makes it more adaptable across diverse mobile operating environments. Because the approach eliminates the need for system-specific customizations, it results in enhanced performance and lower computing requirements.

In the fast-paced world of mobile technology, one concept stands out: Large Language Models, and especially Multimodal Large Language Models (MLLMs), which can work with text, images, video, and speech across many languages. The rapid development of MLLM frameworks in recent years has given rise to a powerful new application of MLLMs: autonomous mobile agents. Autonomous mobile agents are software entities that act, move, and function independently, without direct human commands. They are designed to traverse networks or devices to accomplish tasks, collect information, or solve problems.

Mobile agents are designed to operate a user's mobile device on the basis of the user's instructions and the screen visuals, a task that requires both semantic understanding and visual perception. However, existing mobile agents are far from perfect. They are built on MLLMs, and even state-of-the-art MLLMs such as GPT-4V lack the visual perception abilities needed to serve as an effective mobile agent. In particular, existing frameworks can generate valid operations, but they struggle to locate accurately where on the screen those operations should take place. This limits what mobile agents can do on real devices.

To tackle this issue, some frameworks use user interface layout files to give GPT-4V or other MLLMs localization capabilities. Some extract actionable positions on the screen from an application's XML files, while others use the HTML code of web applications. Most of these frameworks therefore depend on access to underlying, local application files, and they become largely ineffective when those files are unavailable. To remove this dependency on underlying files for localization, the developers built Mobile-Agent, an autonomous mobile agent with strong visual perception capabilities. Its visual perception module works directly on screenshots from the mobile device to locate operations accurately. The module houses OCR and detection models: the OCR model identifies text on the screen, and the detection model describes the content within a specific region of the screen. Mobile-Agent uses carefully crafted prompts to make the interaction between these tools and the agent efficient, which allows it to automate mobile device operations.

Furthermore, the Mobile-Agent framework leverages the contextual capabilities of state-of-the-art MLLMs such as GPT-4V to achieve self-planning. This allows the model to plan tasks holistically from the operation history, the user instructions, and the screenshots. To further improve the agent's ability to detect incompletely executed instructions and wrong operations, Mobile-Agent introduces a self-reflection method. Guided by carefully crafted prompts, the agent consistently reflects on incorrect and invalid operations, and it halts once the task or instruction has been completed.

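Overall, the contributions of the Mobile-Agent framework can be summarized as follows. It is an autonomous, vision-centric mobile device agent that localizes operations with visual perception tools instead of XML files or system metadata. It combines self-planning with self-reflection to carry out complex, multi-step instructions. Finally, it introduces Mobile-Eval, a benchmark for evaluating mobile device agents.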

At its core, the Mobile-Agent framework consists of a state-of-the-art MLLM, GPT-4V, together with a text detection module used for text localization. Alongside these, Mobile-Agent also employs an icon detection module for icon localization.

As mentioned earlier, GPT-4V produces satisfactory operations from instructions and screenshots, but it cannot reliably output the location where those operations should take place. Because of this limitation, Mobile-Agent relies on external tools to localize each operation so that it can be carried out on the mobile screen.

Whenever the agent needs to tap a specific piece of text on the mobile screen, Mobile-Agent uses an OCR tool to detect the position of that text. Three text localization scenarios can arise.

Scenario 1: No Text Detected

Issue: The OCR tool fails to detect the specified text. This can happen with complex images or because of the OCR tool's own limitations.

Response: Instruct the agent to either reselect the text to tap or choose an alternative operation.

Reasoning: This flexibility is necessary to manage the occasional inaccuracies or hallucinations of GPT-4V, ensuring the agent can still proceed effectively.

Scenario 2: One Instance Detected

Operation: Automatically generate an action that taps the center coordinates of the detected text box.

Justification: With only one instance detected, the likelihood of correct identification is high, making it efficient to proceed with a direct action.

Scenario 3: Multiple Instances Detected

Assessment: First, evaluate the number of detected instances:

Many Instances: Indicates a screen cluttered with similar content, which complicates the selection process.

Action: Request the agent to reselect the text, aiming to refine the selection or adjust the search parameters.

Few Instances: A manageable number of detections allows for a more nuanced approach.

Action: Crop the regions around these instances, expanding the text detection boxes outward to capture additional context. This expansion ensures that more information is preserved, aiding in decision-making.

Next Step: Draw detection boxes on the cropped images and present them to the agent. These visual cues help the agent decide which instance to interact with, based on contextual clues or task requirements.
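The three scenarios can be condensed into a single decision function. The following is a minimal sketch, not Mobile-Agent's own code. The matching rule (substring match), the `max_candidates` threshold that separates "few" from "many" instances, and the `margin` used to expand the crops are all hypothetical values, because the article does not give them. Drawing the boxes and prompting the agent are left out.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple, Union

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in screen pixels


@dataclass
class OcrBox:
    """One OCR detection: the recognized string and its bounding box."""
    text: str
    box: Box


@dataclass
class Tap:
    x: int
    y: int


@dataclass
class AskAgent:
    message: str


@dataclass
class Disambiguate:
    # Expanded crops around each candidate; detection boxes would be drawn
    # on these crops and the annotated images shown to the agent.
    regions: List[Box]


def localize_text(target: str, ocr_results: Sequence[OcrBox],
                  screen_w: int, screen_h: int,
                  max_candidates: int = 4,  # hypothetical "few vs. many" cutoff
                  margin: int = 100,        # hypothetical crop expansion in pixels
                  ) -> Union[Tap, AskAgent, Disambiguate]:
    # Hypothetical matching rule: the target must appear inside the OCR text.
    matches = [r for r in ocr_results if target in r.text]

    # Scenario 1: nothing found, so let the agent reselect or change operation.
    if not matches:
        return AskAgent("Text not found. Reselect the text or choose another operation.")

    # Scenario 2: exactly one match, so tap the center of its box.
    if len(matches) == 1:
        left, top, right, bottom = matches[0].box
        return Tap((left + right) // 2, (top + bottom) // 2)

    # Scenario 3, many matches: the screen is too cluttered, ask to reselect.
    if len(matches) > max_candidates:
        return AskAgent("Too many matches. Reselect the text to tap.")

    # Scenario 3, few matches: expand each box outward (clamped to the
    # screen) so the crops keep surrounding context for the agent.
    regions = [
        (max(0, left - margin), max(0, top - margin),
         min(screen_w, right + margin), min(screen_h, bottom + margin))
        for left, top, right, bottom in (m.box for m in matches)
    ]
    return Disambiguate(regions)
```

For example, with a single "Settings" detection at `(10, 20, 110, 60)`, `localize_text("Settings", ...)` returns `Tap(60, 40)`, which is the center of that box.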

This structured approach optimizes the interaction between OCR results and agent operations, enhancing the system's reliability and adaptability in handling text-based tasks across various scenarios. The entire process is demonstrated in the following image.

Mobile-Agent uses an icon detection tool to locate an icon whenever the agent needs to click it on the mobile screen. Specifically, the framework first asks the agent to describe attributes of the icon, such as its shape and color. It then runs Grounding DINO with the prompt "icon" to detect all the icons in the screenshot. Finally, Mobile-Agent uses CLIP to compute the similarity between each detected icon and the description of the click region, and it selects the region with the highest similarity as the click target.
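The final selection step reduces to an argmax over cosine similarities. Below is a minimal sketch that assumes the CLIP embeddings have already been computed: one for the agent's text description, and one for each icon crop cut from the Grounding DINO boxes. Tapping the center of the winning box is an assumption that mirrors the text-tap rule. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # degenerate embedding: treat as no similarity
    return dot / (norm_a * norm_b)


def pick_icon(description_emb: Sequence[float],
              icon_boxes: List[Box],
              icon_embs: List[Sequence[float]]) -> Tuple[int, int, float]:
    """Return the tap point (box center) of the best-matching icon and its score."""
    if not icon_boxes or len(icon_boxes) != len(icon_embs):
        raise ValueError("need one embedding per detected icon box")
    # Score every detected icon against the description of the click region.
    scores = [cosine_similarity(description_emb, emb) for emb in icon_embs]
    best = max(range(len(scores)), key=lambda i: scores[i])
    left, top, right, bottom = icon_boxes[best]
    return (left + right) // 2, (top + bottom) // 2, scores[best]
```

In practice, the embeddings could come from a CLIP implementation such as `CLIPModel.get_text_features` and `CLIPModel.get_image_features` in Hugging Face `transformers`. Plain Python lists are used here so that the sketch stays self-contained.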

To translate the actions into operations on the screen by the agents, the Mobile-Agent framework defines 8 different operations.

Mobile-Agent executes a task iteratively, one step at a time. Before the first iteration, the user provides an input instruction, which the framework uses to generate a system prompt for the entire process. At the start of every iteration, the framework captures a screenshot and feeds it to the agent. The agent then considers the screenshot, the operation history, and the system prompt to output the next operation.
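The iteration can be sketched as a simple control loop. This is a minimal sketch under stated assumptions, not the framework's implementation. `capture_screenshot`, `ask_agent`, and `execute` are hypothetical callables that stand in for the screenshot tool, the GPT-4V call, and the device operation. The `"stop"` signal, the prompt wording, and the `max_steps` cap are also assumptions.

```python
from typing import Callable, List


def run_agent(instruction: str,
              capture_screenshot: Callable[[], bytes],
              ask_agent: Callable[[str, List[str], bytes], str],
              execute: Callable[[str], None],
              max_steps: int = 20) -> List[str]:
    # The system prompt is built once, from the user's instruction.
    system_prompt = f"You are operating a smartphone. User instruction: {instruction}"
    history: List[str] = []
    for _ in range(max_steps):  # hypothetical safety cap on iterations
        screenshot = capture_screenshot()  # fresh screenshot every iteration
        # The agent sees the system prompt, the operation history and the screen.
        action = ask_agent(system_prompt, history, screenshot)
        if action == "stop":  # hypothetical signal that the task is complete
            break
        execute(action)
        history.append(action)
    return history
```

With a scripted agent that answers `"open Settings"`, `"tap Wi-Fi"`, `"stop"`, the loop executes the first two operations and returns them as the history.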

During operation, the agent may make errors that prevent it from completing an instruction. To improve the instruction completion rate, Mobile-Agent implements its self-reflection method, which is triggered in two situations. First, the agent may perform a wrong or invalid operation that stalls progress. This is the case, for example, when it notices that the screenshot is unchanged after the operation or that the wrong page is displayed. In that situation, it is prompted to try an alternative operation or to adjust the parameters of the current one. Second, the agent may miss some requirements of a complex instruction. Once the agent has completed all the operations in its plan, it is prompted to review its operation history, the latest screenshot, and the user's instruction to assess whether the task is actually complete. If it is not, the agent autonomously generates new operations to fulfill the instruction.
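The two triggers can be sketched as a function that decides whether to send a reflection prompt. This is a minimal sketch with hypothetical names and prompt wording. Only the "screen unchanged" check is automated here, by comparing screenshot digests. Recognizing a wrong page is left to the agent, as the article describes.

```python
import hashlib
from typing import Optional


def screen_digest(png: bytes) -> str:
    # A digest is cheap to store for every step of the operation history.
    return hashlib.sha256(png).hexdigest()


def reflection_prompt(before: bytes, after: bytes,
                      plan_finished: bool, instruction: str) -> Optional[str]:
    """Return a reflection prompt for the agent, or None to continue normally."""
    # Trigger 2: the plan is done, so check that the instruction was fully met.
    if plan_finished:
        return ("Review your operation history, the latest screenshot and the "
                f"instruction '{instruction}'. If anything is still missing, "
                "output the operations needed to finish it; otherwise output stop.")
    # Trigger 1: the last operation left the screen unchanged.
    if screen_digest(before) == screen_digest(after):
        return ("The last operation did not change the screen. Try a different "
                "operation or adjust the parameters of the current one.")
    return None
```

Byte-exact comparison is deliberately strict. On a real phone, the status bar clock or animations can change pixels even when an operation had no effect. A practical version would therefore probably compare a cropped or downscaled screenshot instead.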

To evaluate its abilities comprehensively, Mobile-Agent introduces the Mobile-Eval benchmark, which covers 10 commonly used applications with three instructions designed for each. The first instruction is straightforward and covers only basic application operations. The second adds some requirements, which makes it more complex than the first. The third is the most complex: it is an abstract user instruction in which the user does not explicitly specify which app to use or which operation to perform.

To assess performance from different perspectives, the Mobile-Agent framework designs and implements four different metrics.

The results are demonstrated in the following figure.

First, for the three instruction types, Mobile-Agent achieved success rates of 91%, 82%, and 82%, respectively. Although not every task was executed flawlessly, the completion rate for each instruction type exceeded 90%. The Process Score (PS) metric shows that Mobile-Agent consistently has a high likelihood of executing correct actions across the three instruction types, with scores of around 80%. In addition, the Relative Efficiency (RE) metric shows that Mobile-Agent operates at about 80% of the efficiency of a human performing the task optimally. Together, these results underscore Mobile-Agent's proficiency as a mobile device assistant.

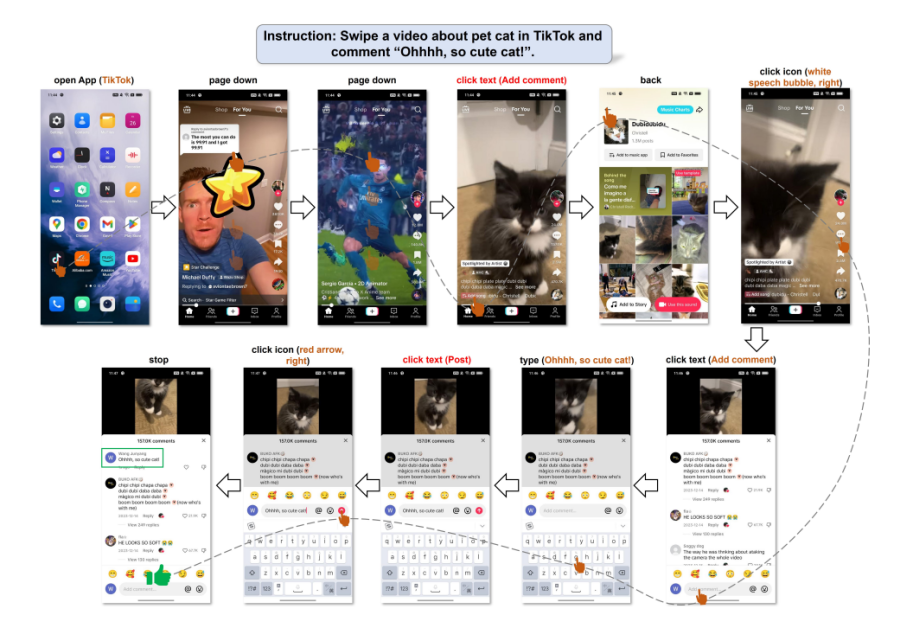
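The article names the metrics without defining them. The sketch below gives one plausible set of definitions, chosen to match the wording above; the paper's exact formulas may differ. Success rate is the share of instructions that were fully completed. Process score is the fraction of the agent's operations that were correct. Relative efficiency is the number of human-optimal steps divided by the number of agent steps. Completion rate is the fraction of the human's steps that the agent reached. The `Episode` record and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Episode:
    success: bool          # instruction fully completed
    agent_steps: int       # operations the agent issued
    correct_steps: int     # of those, operations judged correct
    human_steps: int       # steps in a human's optimal solution
    human_steps_done: int  # how many of those steps the agent reached


def summarize(episodes: List[Episode]) -> Dict[str, float]:
    n = len(episodes)
    return {
        "success_rate": sum(e.success for e in episodes) / n,
        "process_score": sum(e.correct_steps / e.agent_steps for e in episodes) / n,
        # Pooled ratio: how many steps a human needs per step the agent takes.
        "relative_efficiency": sum(e.human_steps for e in episodes)
                               / sum(e.agent_steps for e in episodes),
        "completion_rate": sum(e.human_steps_done / e.human_steps for e in episodes) / n,
    }
```

Under these definitions, an agent that needs 15 operations for tasks that a human finishes in 12 scores a relative efficiency of 12 / 15 = 0.8. This is the kind of "80% of human-optimal efficiency" reading described above.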

The following figure illustrates Mobile-Agent's ability to understand user commands and orchestrate its actions independently. Even when the instructions contained no explicit operation details, Mobile-Agent correctly interpreted the user's needs and converted them into actionable tasks. It then executed those tasks through systematic planning.

In this article, we have talked about Mobile-Agent, a multimodal autonomous mobile device agent. It first uses visual perception tools to precisely detect and locate both the visual and textual components within a mobile application's interface. With this visual context, the Mobile-Agent framework autonomously breaks complex tasks down into manageable actions and navigates through mobile applications step by step. The framework stands out from existing methods because it does not depend on the mobile system's metadata or on apps' XML files. Its vision-centric processing gives it greater flexibility across different mobile operating systems. By removing the need for system-specific adaptations, Mobile-Agent's approach improves efficiency and reduces computational demands.